My wife is a veterinarian. Her website is not "brand storytelling." It's a tool. People check opening hours, find the address, and call when their pet is in trouble, usually from a phone and usually in a rush.

Her old site looked fine at first glance, but the usual cracks were there: it was slower than it needed to be, awkward to update, and boxed in by the limits of a website builder. (And yes, a monthly fee for the privilege.)

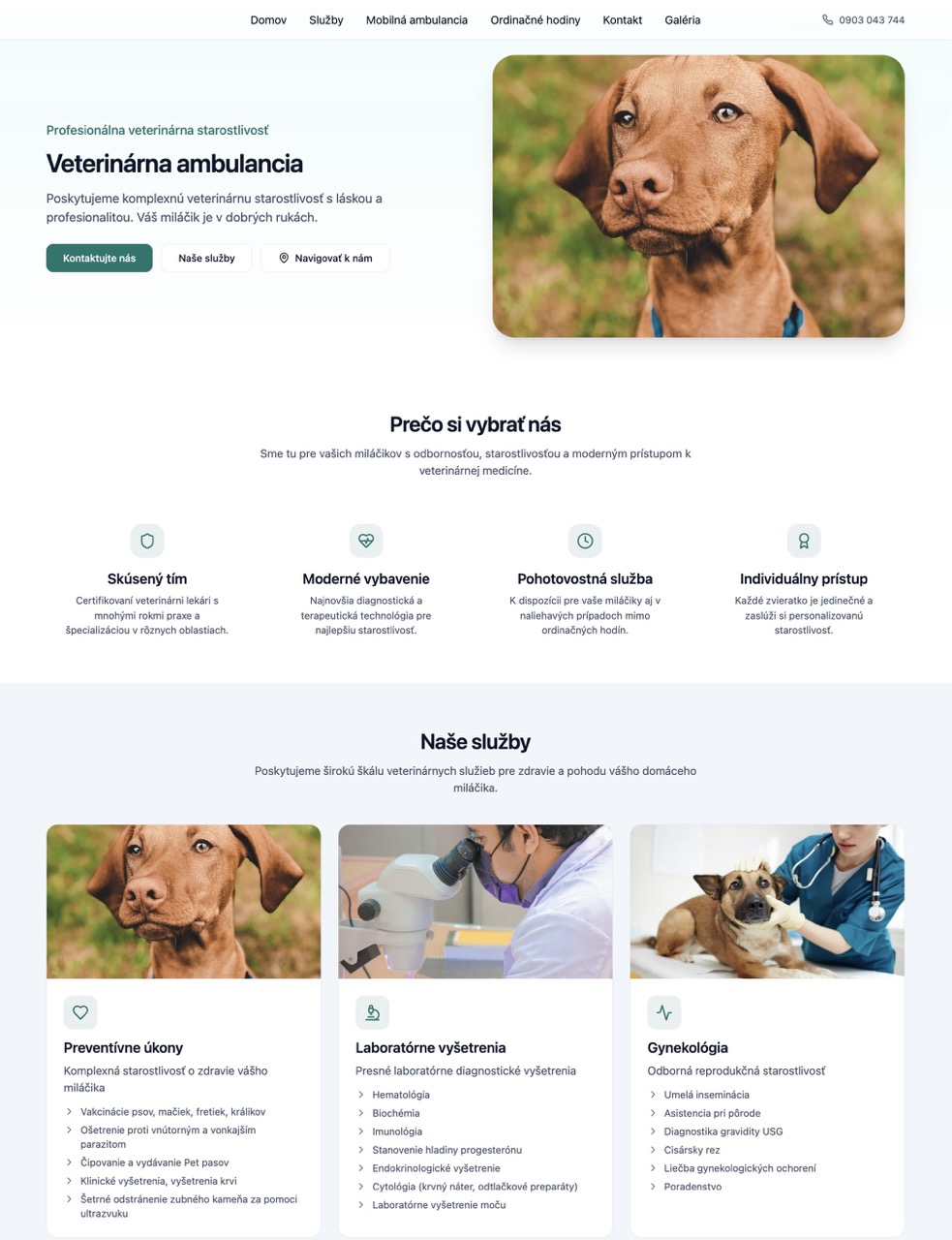

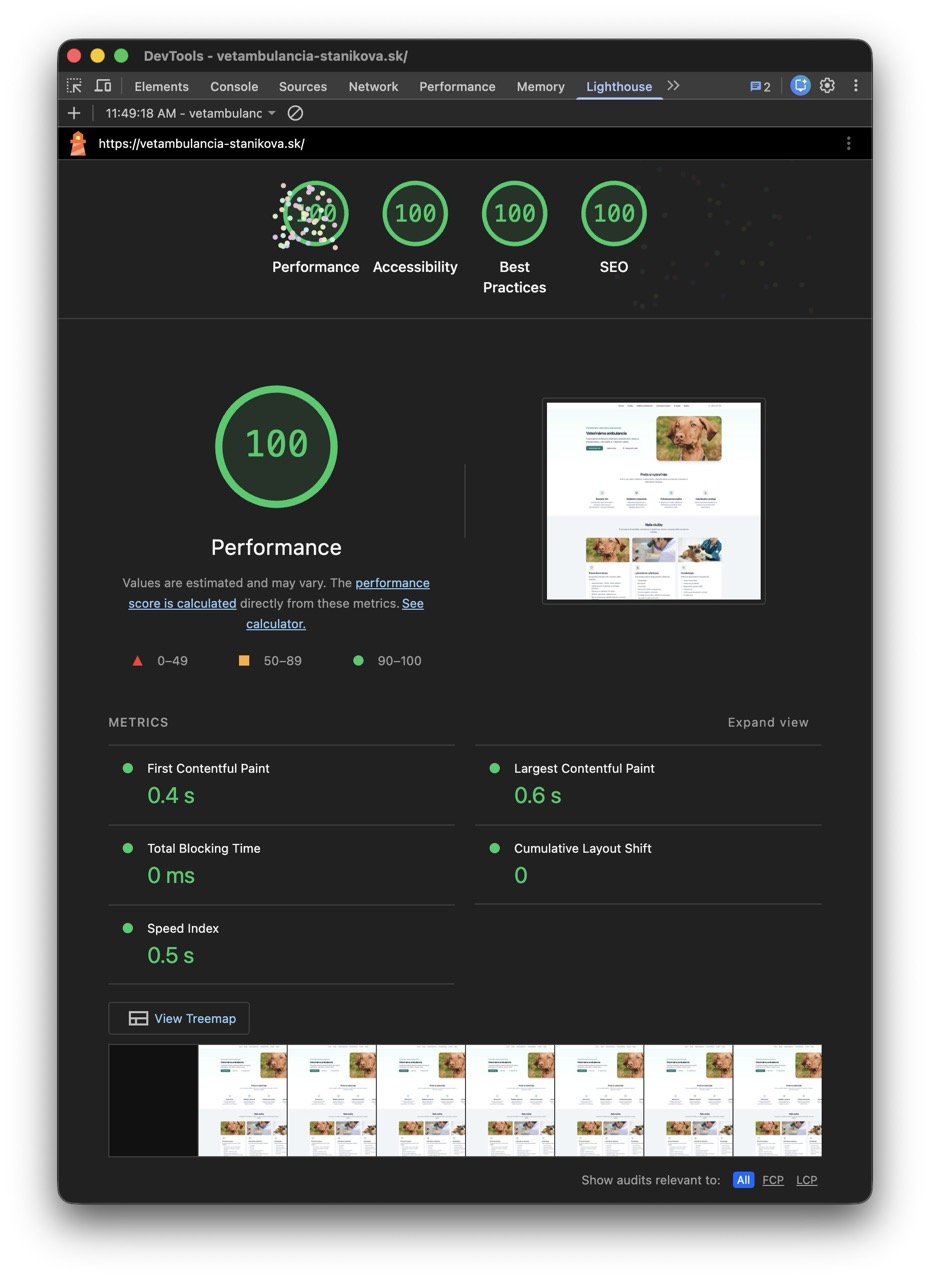

So I rebuilt it in one evening. The result was boring in the best way: responsive, accessible, SEO-ready, and 100/100/100/100 in Lighthouse (performance, accessibility, best practices, SEO).

What I actually did

I did not start from a blank page. I used the old site as the spec because the structure and content were already validated by real users.

Then I recreated the design in Figma Make, iterated a few rounds, and turned the result into a real codebase using Claude Code + Codex. From there, it was a straightforward build with Deno + Fresh 2 and a deploy to Deno Deploy.

Total time was around three hours.

Why the Lighthouse score was high

After I finished the design and code, I ran a Lighthouse report. Surprisingly, most of the high score came out of the box. The initial run was already in the high 90s. Getting to 100 was a bit of cleanup, not days of tweaking.

Performance was mostly about well-known restraint: minimal JavaScript, clean assets, and no random third-party scripts. Accessibility came from semantic HTML and boring basics like labels, keyboard support, and visible focus states. SEO was just crawlable markup and sane metadata. "Best practices" came from the same discipline: HTTPS, stable layout, no console mess.
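To make the "boring basics" concrete, here is a minimal sketch of the kind of check I mean. It is not what Lighthouse runs internally, and `checkHtmlBasics`, `BasicsReport`, and the sample page are names and content I made up for illustration. It uses plain regular expressions on the rendered HTML, so treat it as a smoke test rather than a real audit.

```typescript
// Hypothetical helper: cheap regex checks for a few basics that audits also look at.
// Assumption: the input is the final rendered HTML of one page.
// This is not an HTML parser and not a replacement for a Lighthouse run.
interface BasicsReport {
  hasLang: boolean;
  hasTitle: boolean;
  hasMetaDescription: boolean;
  hasViewport: boolean;
  imagesMissingAlt: number;
}

function checkHtmlBasics(html: string): BasicsReport {
  // Collect every <img ...> tag so we can count the ones without an alt attribute.
  const imgTags = html.match(/<img\b[^>]*>/gi) ?? [];
  return {
    hasLang: /<html\b[^>]*\blang\s*=\s*["'][^"']+["']/i.test(html),
    hasTitle: /<title>\s*[^<\s][^<]*<\/title>/i.test(html),
    hasMetaDescription:
      /<meta\b[^>]*\bname\s*=\s*["']description["'][^>]*>/i.test(html),
    hasViewport: /<meta\b[^>]*\bname\s*=\s*["']viewport["'][^>]*>/i.test(html),
    // Only checks that the attribute is present; alt="" is valid for decorative images.
    imagesMissingAlt: imgTags.filter((tag) => !/\balt\s*=/i.test(tag)).length,
  };
}

// Made-up sample page: one image has alt text, one does not.
const samplePage = `<!doctype html>
<html lang="en">
<head>
<title>Example Vet Clinic</title>
<meta name="viewport" content="width=device-width, initial-scale=1">
<meta name="description" content="Opening hours, address, and phone number.">
</head>
<body><img src="clinic.png" alt="Clinic entrance"><img src="map.png"></body>
</html>`;

console.log(checkHtmlBasics(samplePage));
```

For the sample page, every flag is true and `imagesMissingAlt` is 1. That is the whole genre: small, mechanical fixes you can list and tick off.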

What still bugs me is that plenty of businesses pay serious money for "optimization" packages that promise roughly the same result for small brochure sites, often built in WordPress.

The uncomfortable business takeaway

If a production website like this can be rebuilt in three hours, pricing gets weird very quickly.

This is most visible in software because the output is easy to ship and measure. But it does not stop there. The same squeeze is spreading to services around software (design tweaks, SEO cleanups, analytics setup, content moves) and even to everyday office work (docs, spreadsheets, proposals, email).

In software, it shows up first in places like admin dashboards, internal tools, CRUD-heavy modules, integration layers, and simple SaaS surfaces.

No, software is not "free now." But delivery is cheaper and faster now, and old vendor models are getting hard to defend.

What buyers should ask for in 2026

Stop buying hours. Buy outcomes with acceptance gates.

A practical baseline is weekly demos of working software, CI gates (tests, linting, type checks), performance and cost budgets, security hygiene (dependency scanning, secrets handling), basic observability where it matters, and a handover standard that a new engineer can run in under 30 minutes.
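As a sketch of what a performance budget can look like as an acceptance gate, the snippet below checks the category scores from a Lighthouse JSON report against minimums. Lighthouse stores each category score as a number from 0 to 1. The `budgets` values, the `checkBudgets` name, and the sample report are my own assumptions for illustration, not a standard.

```typescript
// The part of a Lighthouse JSON report this sketch reads.
// Category scores are numbers from 0 to 1, or null when a score could not be computed.
interface LighthouseReport {
  categories: Record<string, { score: number | null }>;
}

// Hypothetical budgets: minimum score per category, on the 0-100 scale shown in the UI.
const budgets: Record<string, number> = {
  performance: 95,
  accessibility: 100,
  "best-practices": 100,
  seo: 100,
};

// Returns one human-readable line per failed budget; an empty array means the gate passes.
function checkBudgets(
  report: LighthouseReport,
  minimums: Record<string, number>,
): string[] {
  const failures: string[] = [];
  for (const [category, minimum] of Object.entries(minimums)) {
    const raw = report.categories[category]?.score;
    if (raw == null) {
      failures.push(`${category}: no score in report`);
      continue;
    }
    const score = Math.round(raw * 100);
    if (score < minimum) failures.push(`${category}: ${score} < ${minimum}`);
  }
  return failures;
}

// Made-up report: performance is below budget, everything else passes.
const sampleReport: LighthouseReport = {
  categories: {
    performance: { score: 0.91 },
    accessibility: { score: 1 },
    "best-practices": { score: 1 },
    seo: { score: 1 },
  },
};

console.log(checkBudgets(sampleReport, budgets));
```

In a CI job, a non-empty result would fail the build. The point is that the vendor and the buyer agree on the numbers up front, and the pipeline enforces them instead of a status meeting.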

If AI speeds up execution, standards should go up. Not down.

The responsible way to use AI

AI is excellent at drafting, scaffolding, refactors, test generation, and docs. It is still weak at judgment.

So keep humans on product decisions, architecture boundaries, and verification. Then enforce quality gates so "looks good to me" is never the release process.

AI also gives non-technical leaders something new. A CEO can take a vendor update, an architecture diagram, or a list of tickets and ask for a plain-language translation: what changed, what it enables, what might break later, and what to watch. That makes it easier to judge progress and challenge costs that do not match delivered outcomes.

Final point

This project was small, but it matches what I keep seeing elsewhere. Execution is faster than it used to be, and buyers should expect more for the same budget.

That does not mean squeezing teams until they break. It means reducing scope where needed and refusing to lower quality bars.