The Context

For most of the last 50 years, building software meant mastering a specialist language. Not English or Slovak, but the dialects of frameworks, APIs, build tools, and patterns. If you could speak those dialects fluently, you could instruct a computer. If you couldn't, you were locked out.

LLMs change that.

They do more than "help you code." They compress the gap between intent and implementation. Programming still happens in a language, but now we have a near-instant translator that moves back and forth between:

- Human intent (what you want the system to do)

- Machine-executable specifications (code, tests, configs, infra)

That matters because it makes software creation accessible in a way it simply wasn't before. A non-programmer can describe a workflow and get a functioning version of it. A solo builder can ship an idea in hours. A small team can iterate at a pace that used to require a much larger engineering org.

Here's the blunt claim: LLMs have largely solved the "typing code" part of programming. The bottleneck has moved.

What still separates hobby scripts from production software is not whether you can generate code. It's whether you can:

- Choose the right constraints and UX for humans

- Define boundaries so the system stays coherent as it grows

- Verify correctness (tests, edge cases, validation)

- Ship and operate it (deployments, observability, failure modes)

AI is a high-gain amplifier: clear intent and constraints produce clean output; vague intent produces confident junk. So yes, more people can build software now. But experienced engineers still matter because they provide architecture, product judgment, and safety rails.

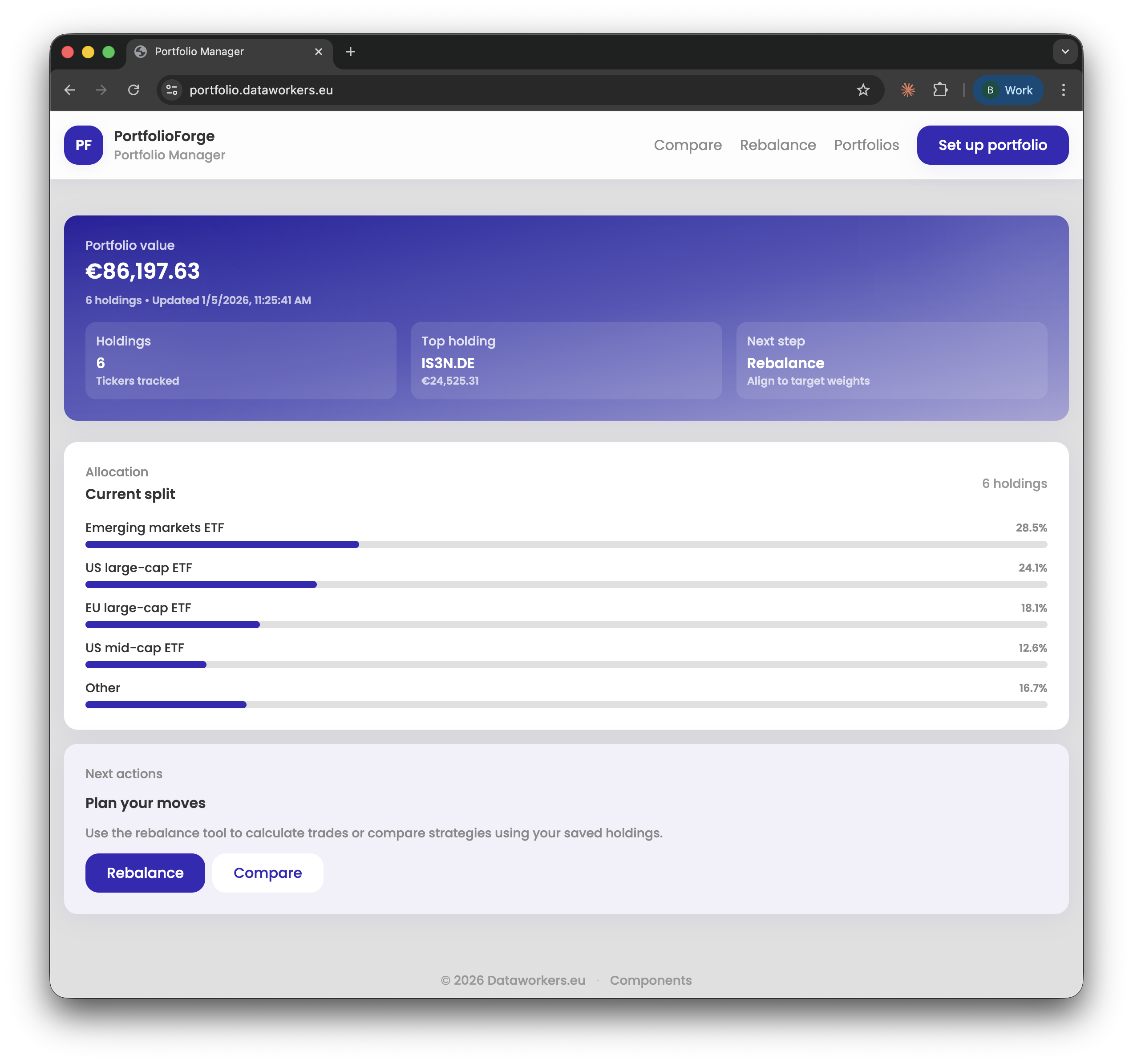

Portfolio Manager is a small web app I built in one evening to validate a practical workflow: compare ETF strategies and generate a whole-share rebalancing plan before buying.

The point wasn't to build a perfect finance product. It was to prove something more general:

- Can a fuzzy idea become a usable flow in hours, not weeks?

- Can a design kit turn into a clean component system without a heavy front-end stack?

- Can AI remove the biggest UX friction: importing messy portfolio data?

Live app: https://portfolio.dataworkers.eu

The Brief

Build a small tool that lets a user:

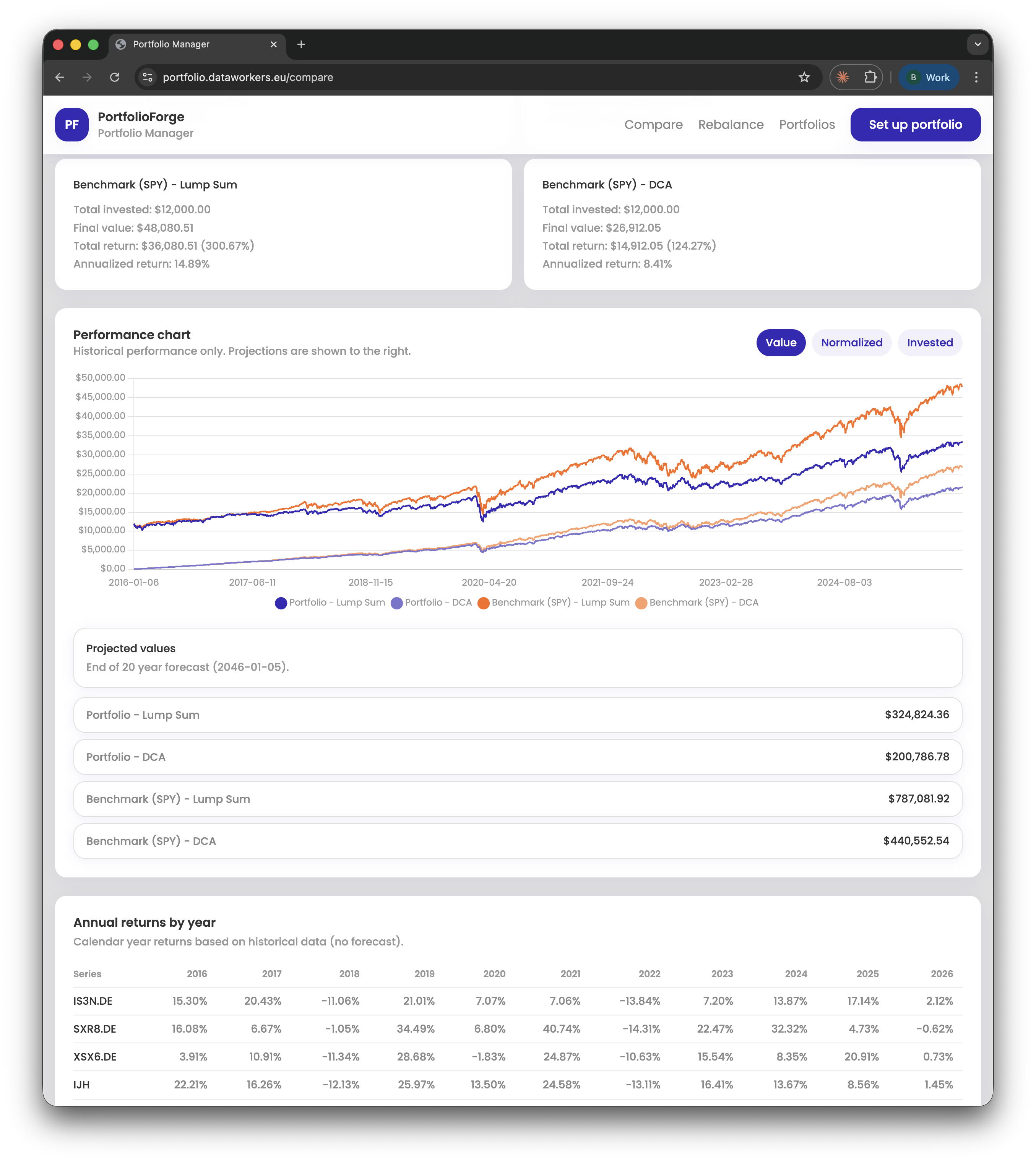

- Compare lump-sum investing vs. monthly DCA for a custom ETF blend

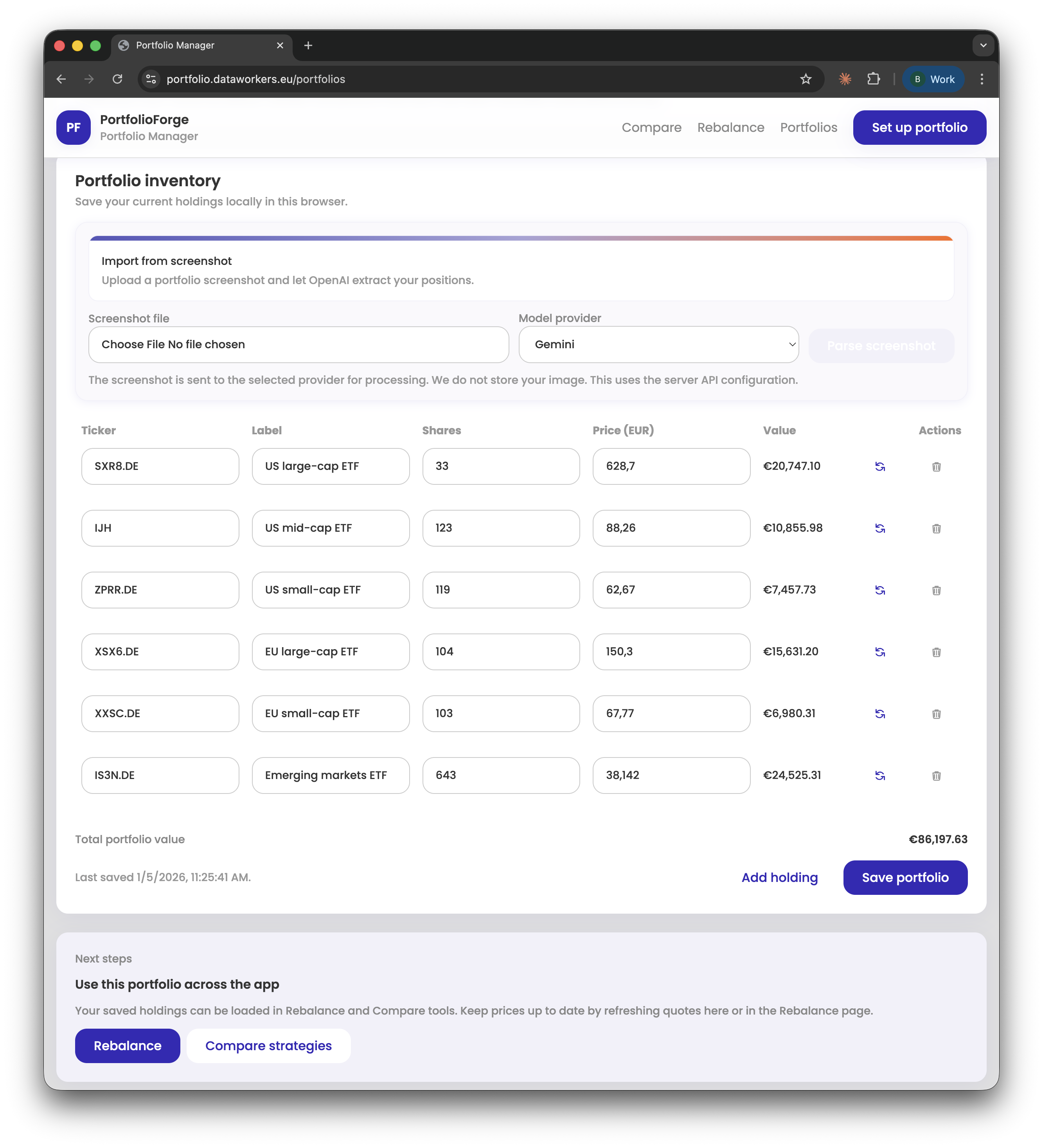

- Save a portfolio inventory (tickers, shares, prices) locally for reuse

- Upload a broker screenshot and extract holdings with an LLM, then review

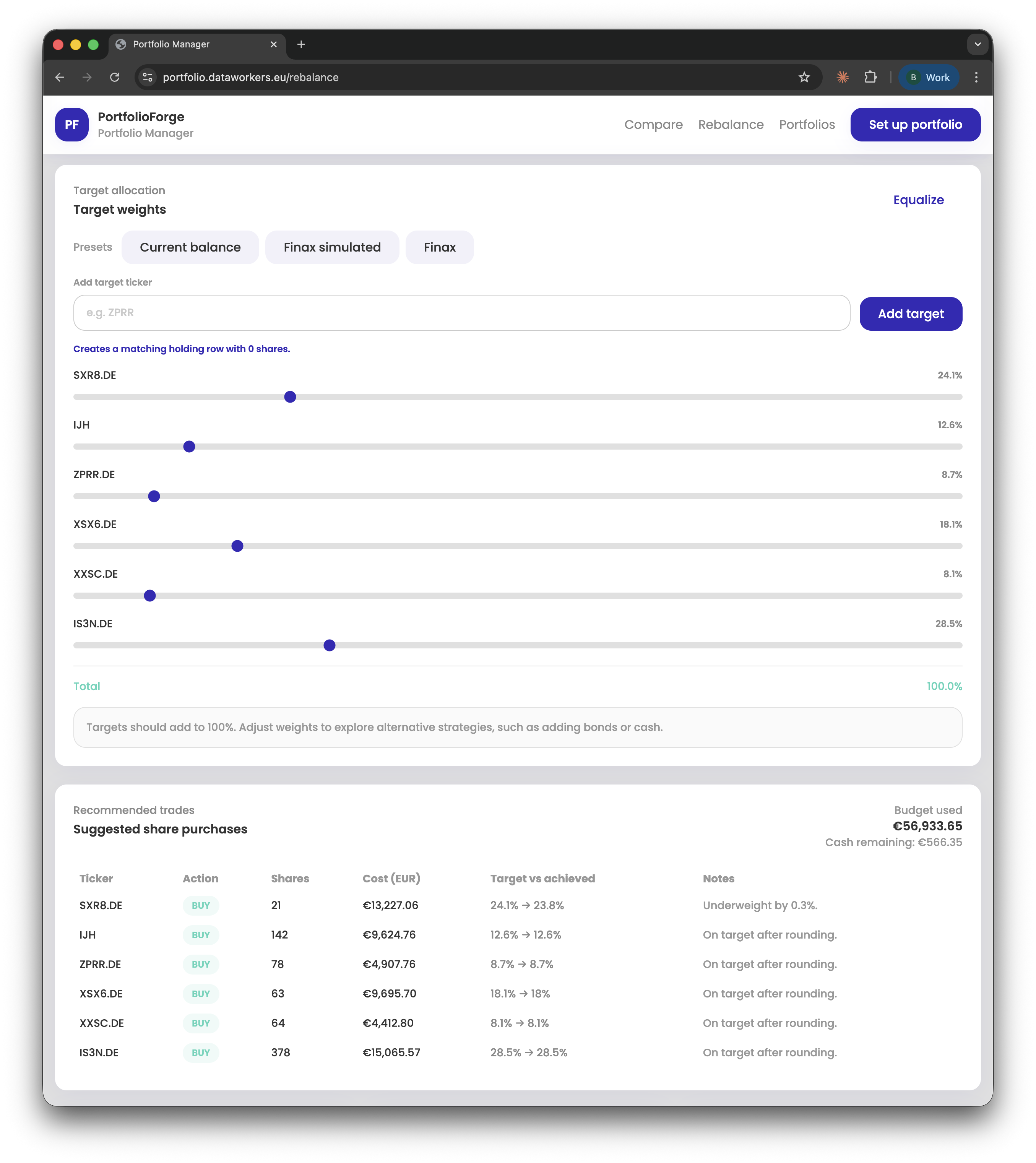

- Generate a whole-share rebalancing plan toward target weights
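The last item, a whole-share rebalancing plan, comes down to a small amount of arithmetic. Here is a minimal sketch of one way to do it, not the app's actual code: the `Holding` and `Trade` shapes, the `planRebalance` name, and the round-down rule are all assumptions made for illustration. It only considers tickers present in `holdings`, so a target ticker you don't own yet needs a row with zero shares and a price.

```typescript
// Hypothetical data shapes; the app's real model is not shown in this article.
interface Holding {
  ticker: string;
  shares: number; // whole shares currently held
  price: number; // latest price per share
}

interface Trade {
  ticker: string;
  deltaShares: number; // positive = buy, negative = sell
}

// Compute whole-share trades that move a portfolio toward target weights.
// `targets` maps ticker -> weight; weights are assumed to sum to 1.
// `cash` is new money to invest on top of the existing holdings.
function planRebalance(
  holdings: Holding[],
  targets: Record<string, number>,
  cash = 0,
): { trades: Trade[]; leftoverCash: number } {
  // Total value available: current positions plus new cash.
  const total = holdings.reduce((sum, h) => sum + h.shares * h.price, cash);
  let spent = 0;
  const trades: Trade[] = [];
  for (const h of holdings) {
    const targetValue = total * (targets[h.ticker] ?? 0);
    // Round down so the plan never spends more than is available.
    const targetShares = Math.floor(targetValue / h.price);
    const deltaShares = targetShares - h.shares;
    spent += deltaShares * h.price;
    if (deltaShares !== 0) trades.push({ ticker: h.ticker, deltaShares });
  }
  return { trades, leftoverCash: cash - spent };
}

const example = planRebalance(
  [
    { ticker: "A", shares: 10, price: 100 },
    { ticker: "B", shares: 0, price: 30 },
  ],
  { A: 0.5, B: 0.5 },
);
console.log(example);
```

With these inputs the plan sells 5 shares of A, buys 16 shares of B, and leaves 20 in cash, because 500 does not divide evenly by a price of 30. That leftover is the reason a whole-share plan is worth showing before buying.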

The twist: I had never used Deno before.

The Approach

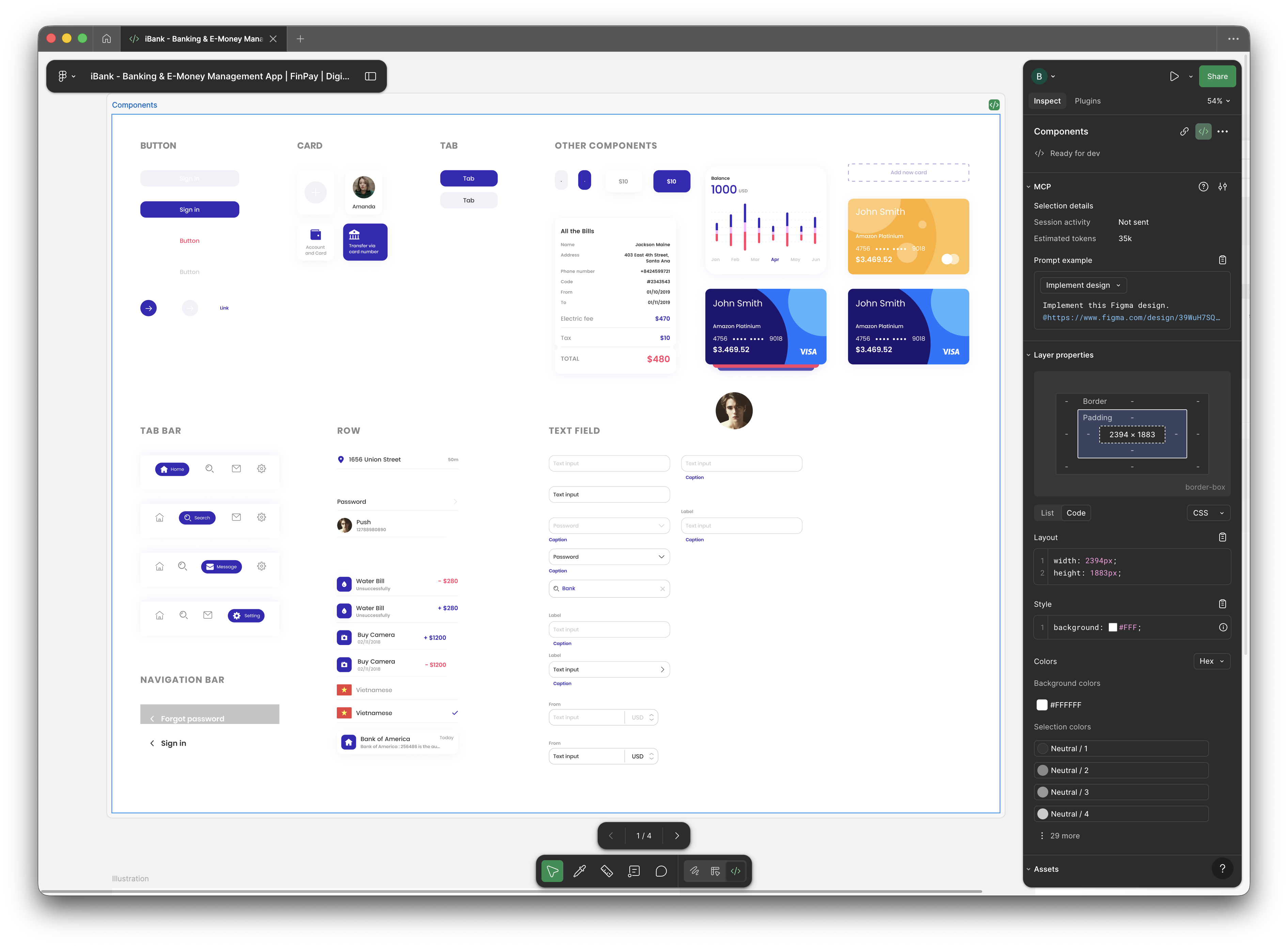

1) Design-first -> component system

The UI started from a free Figma design kit. Instead of pixel-matching one-off screens, I translated the kit into a small set of reusable, server-rendered components (buttons, cards, inputs, tabs, navigation).

This has two benefits:

- The UI stays consistent even as features change rapidly

- Iteration is cheap. New pages are mostly composition, not custom CSS

I also built a /components route as a quick visual audit for the design tokens and primitives used across the app.
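To make "tokens and primitives" concrete, here is a minimal sketch of how a design kit can be reduced to a small token map that every button goes through. The variant names, the Tailwind classes chosen, and the `buttonClasses` / `renderButton` helpers are illustrative assumptions; the real kit's values are not reproduced here, and in the app itself this would be a Preact component rather than a string template.

```typescript
// Hypothetical tokens; names and values are placeholders for the kit's own.
type Variant = "primary" | "secondary" | "ghost";
type Size = "sm" | "md";

const variantClasses: Record<Variant, string> = {
  primary: "bg-blue-600 text-white hover:bg-blue-700",
  secondary: "bg-slate-100 text-slate-900 hover:bg-slate-200",
  ghost: "bg-transparent text-slate-700 hover:bg-slate-100",
};

const sizeClasses: Record<Size, string> = {
  sm: "px-3 py-1.5 text-sm",
  md: "px-4 py-2 text-base",
};

// Single place where a button's look is decided.
function buttonClasses(variant: Variant = "primary", size: Size = "md"): string {
  return [
    "inline-flex items-center rounded-lg font-medium",
    variantClasses[variant],
    sizeClasses[size],
  ].join(" ");
}

// Escape text before placing it into server-rendered HTML.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Render a button as an HTML string (stand-in for a server-rendered component).
function renderButton(label: string, variant?: Variant, size?: Size): string {
  return `<button class="${buttonClasses(variant, size)}">${escapeHtml(label)}</button>`;
}

console.log(renderButton("Save portfolio"));
console.log(renderButton("Cancel", "ghost", "sm"));
```

Because pages only ever pick a variant and a size, changing a token updates every screen at once, and an audit page can simply loop over all variant and size combinations.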

2) Fresh islands architecture (ship less JavaScript)

Fresh makes it easy to keep most of the UI server-rendered and only ship client JavaScript where interactivity matters:

- Strategy charts and interactive comparisons

- Allocation controls and rebalancing planner

- Screenshot import review flow

That keeps the app lightweight while still feeling dynamic.
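The calculation behind an interactive comparison like the one listed above can stay a plain function with no DOM or framework dependency, so the same code can run on the server or inside an island. Below is a minimal sketch of the lump-sum versus monthly DCA arithmetic. The function name and input shape are assumptions, and it deliberately ignores fees, dividends, and whole-share limits.

```typescript
// Compare investing `total` all at once vs. in equal monthly instalments.
// `prices` is one price per month, oldest first (hypothetical input shape).
function compareStrategies(
  prices: number[],
  total: number,
): { lumpSum: number; dca: number } {
  if (prices.length === 0) throw new Error("need at least one price");
  const last = prices[prices.length - 1];

  // Lump sum: buy everything at the first price.
  const lumpUnits = total / prices[0];

  // DCA: invest an equal amount at every price point.
  const monthly = total / prices.length;
  const dcaUnits = prices.reduce((units, p) => units + monthly / p, 0);

  // Value both positions at the final price.
  return { lumpSum: lumpUnits * last, dca: dcaUnits * last };
}

// A dip in the middle month favours DCA in this toy series.
console.log(compareStrategies([100, 50, 100], 300));
```

In this toy series the lump sum ends where it started at 300, while DCA ends at 400 because the middle instalment bought twice as many units. A series that only rises would show the opposite result.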

3) AI-assisted screenshot import (pragmatic, not magical)

The most painful UX in portfolio tools is data entry. Instead of forcing users to type tickers and shares manually, the app supports uploading a broker screenshot and extracting holdings via OpenAI or Gemini.

The flow is designed as "AI as draft":

- Extract -> show a review screen -> user corrects -> then save

- No blind auto-import into the portfolio state

That design principle is what makes AI safe in real apps: LLM output is an input to a human workflow, not an unquestioned source of truth.
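One way to express "AI as draft" in code is to treat the model's response as untrusted text, convert it into draft rows with explicit issues, and only pass on rows the user has confirmed. This is a minimal sketch under assumptions: the JSON row format, the `DraftHolding` shape, and both function names are hypothetical, and the call to OpenAI or Gemini itself is left out.

```typescript
// Hypothetical draft shape shown on the review screen.
interface DraftHolding {
  ticker: string;
  shares: number;
  price: number | null;
  issues: string[]; // reasons this row needs human attention
}

// Turn raw model output (assumed: a JSON array of rows) into reviewable drafts.
// Nothing here writes to the saved portfolio.
function parseExtraction(raw: string): DraftHolding[] {
  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch {
    return []; // unparseable output: nothing to review
  }
  if (!Array.isArray(data)) return [];

  return data.map((row): DraftHolding => {
    const r = (row ?? {}) as Record<string, unknown>;
    const issues: string[] = [];

    const ticker = typeof r.ticker === "string" ? r.ticker.trim().toUpperCase() : "";
    if (!/^[A-Z0-9.\-]{1,12}$/.test(ticker)) issues.push("ticker looks invalid");

    const shares = Number(r.shares);
    if (!Number.isFinite(shares) || shares <= 0) issues.push("shares must be a positive number");

    const price = r.price == null ? null : Number(r.price);
    if (price !== null && (!Number.isFinite(price) || price <= 0)) {
      issues.push("price must be a positive number");
    }

    return {
      ticker,
      shares: Number.isFinite(shares) ? shares : 0,
      price: price !== null && Number.isFinite(price) ? price : null,
      issues,
    };
  });
}

// Only rows the user has confirmed, and that have no open issues, get saved.
function confirmedRows(drafts: DraftHolding[], confirmed: Set<number>): DraftHolding[] {
  return drafts.filter((d, i) => confirmed.has(i) && d.issues.length === 0);
}

const drafts = parseExtraction(
  '[{"ticker":" vwce ","shares":12,"price":101.5},{"ticker":"","shares":"abc"}]',
);
console.log(drafts);
console.log(confirmedRows(drafts, new Set([0, 1])));
```

In the example the second row is flagged for both its ticker and its share count, so even if the user ticks both rows only the first one passes through. The user has to fix the flagged row on the review screen before it can be saved.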

4) Build fast with LLMs as the translator layer

This project was developed with AI coding assistants only: OpenAI Codex (Codex CLI) and Claude Code.

The important point is not "AI wrote the app." The role of the developer shifts from typing code to directing a system.

In practice, the division of labor looked like this:

- I defined the product intent, UX constraints, and architecture boundaries

- The LLMs translated that intent into working Fresh/Preact/Tailwind/Deno code

- I enforced consistency, corrected blind spots, and pushed refactors until the codebase stayed coherent

This is the leverage clients care about: shorter cycles from hypothesis to working software without surrendering control.

Results

In about seven hours I shipped a working, deployed app that demonstrates:

- End-to-end delivery (idea -> UI system -> features -> deployment)

- A concrete example of "LLM + product UX" that removes real friction (portfolio import)

- A clean Deno + Fresh codebase, despite this being my first Deno project

The point is not finance software. It's proof that with the right constraints, software delivery can move much faster and still produce a maintainable product.

What this demonstrates (for clients)

- Speed without chaos - shipping fast is easier when you start from a design system and keep architecture simple.

- AI as an interface layer - use LLMs where they remove boring work (like data capture), then wrap them in safe UX (review + correction).

- Outcome-driven prototyping - build the smallest app that answers the question, then scale only after it proves value.

- Engineering judgment still matters - LLMs accelerate output, but they do not define the problem, validate decisions, or guarantee correctness.

Tech Stack Snapshot

| Area | Choice |
|---|---|
| Runtime | Deno 2 |
| Web framework | Fresh 2 |
| UI | Preact |
| Styling | Tailwind CSS |
| Market data | Yahoo Finance |
| Screenshot extraction | OpenAI or Gemini (optional) |